Overview

In order to stabilize, there needs to be control. In order to control, the state must be estimated in flight. In order to estimate the state in flight, the estimator must be designed and verified on the ground. In this post, I will give an overview for designing and testing the estimator in simulation so that it can eventually be put on the aircraft.

Filter Selection

In order to generate estimates of the aircraft state at each time step, I required a filter to combine the known state information, predicted behavior of the dynamics, and sensor measurements. I have two options to do this. The first is to use a model-based estimator that combines the expected dynamics of the aircraft and its inputs with the sensor measurements. In order to implement this, I would need an accurate aircraft model. Unfotunately, aircraft models, especially small aircraft like mine, are very difficult to model accurately. Even with an approximated form of the dynamics, separate parameters are very specific to the aircraft and difficult to find. For that reason, the filter I'm using now is a simple Navigation filter. This means that instead of predicting the states with a model, I instead propagated the measurements of acceleration and angular rate during the propagation step and used all other sensor measurements during the update step.

Once this navigation filter is working properly, I can use its outputs to generate an aircraft model and use that model for the full model-based filter. That process will be described in future posts.

The dynamics of this system, although simple, are nonlinear. This means that a simple Kalman filter would be inadequate. I am most familiar with Extended Kalman Filters (EKF) and Sigma Point Filters (SPF), also known as Unscented Kalman Filter (UKF). I chose to do most of this work with the EKF because I find it easier to debug.

States

The required states to describe any object in space, or at least the ones I cared about, are 3 elements describing its position in some coordinate system, 3 describing its velocity in some frame and coordinate system and 3 or 4 describing its attitude as a rotation between its own coordinate system and a reference coordinate system. To make matters simple, my reference coordinate system and frame was the North, East, Down (NED) frame with an origin at wherever the plane took off. I'm making the assumption the earth rotates and orbits slow enough relative to everything I'm doing that I can consider this frame inertially fixed. Therefore my position, velocity, and attitude all reference this frame and coordinate system. As the result, I will be using the term "frame" to mean the same thing as "coordinate system" moving forward because these are both now fixed. The position vectors are specified in the NED frame. The velocity vectors are specified in the body frame. The body frame for an aircraft and the NED frame are shown in this image:

To describe the attitude, we have two options: Euler angles and Quaternions. Euler angles are well defined for aircraft geometry with the roll axis being about x, the pitch about y, and the yaw being defined by the angle the projection of x-axis makes on the NE plane with the N axis. These are defined well in this

paper.

Quaternions are more complicated but do not suffer from gimbal lock among other advantages. Their major disadvantage is that they are far more difficult to understand, causing debugging to be difficult. In order to

get off the ground faster, I went with Euler angles to start and then swapped them for quaternions later.

This led to a state vector that looked like this:

$$

\begin{bmatrix}

N & E & D & u & v & w & \phi & \theta & \psi

\end{bmatrix}

$$

or for the case of quaternions:

$$

\begin{bmatrix}

N & E & D & u & v & w & q_1& q_2& q_3 &q_4

\end{bmatrix}

$$

Note that I am using the 1-4 indices for the quaternions with 4 being the scalar term. This is not the most common form but is consistent with the spacecraft attitude textbook I was referencing.

The state derivative function using Euler angles, $\dot{\vec{x}}=f(\vec{x},\vec{u})$:

(apologies for the change in the equation background color. Blogger and Latex aren't as friendly as I'd hoped)

Here, ${}^{I}C^{B} $ represents the transition matrix between the body and inertial (NED) frame:

$$ {}^{I}C^{B} = R_z(\psi)R_y(\theta)R_x(\phi)$$

and $X$, $Y$, and $Z$ are the accelerometer measurements in the body frame.

The equations for quaternions are similar but take longer to write out so I'm leaving them out for now.

Measurements

The measurement vector is made up of the information from the original sensors, though some of it passed through some preprocessing before entering the vector itself.

GPS Conversion and Covariance

The GPS provided Longitude, Latitude, Altitude, Speed, and Heading. The first step was to convert the Longitude, Latitude, and Altitude into NED. To do this I first had to define the conversion from longitude and latitude to ECEF position. I did this with the assumption of a slightly flattened earth and the help of this

site. From there, I had to find the NED frame at that point and define a transformation between ECEF and NED. Some calculations, validated

here, allowed me to define the transformation from ECEF to NED based on the first position measurement. All further measurements were converted to ECEF, then NED, then subtracted from the initial point to generate their own NED vector.

The 1 sigma values for the position were pulled from the GPS's documentation with a little bit added to each in order to account for the stale data (see the previous post). There was no apparent documentation for vertical accuracy so a guess was made here based on some testing data.

The speed and heading both had documented 1 sigma values. The measurement function for speed was $s_t = \sqrt{u^2+v^2+w^2}$. For heading, $h_t = atan2(v_{NED}(2),v_{NED}(1))$ where $v_{NED}$ is the velocity vector converted into the NED frame.

IMU Covariance and Bias

The IMU gave 9 separate pieces of information, Angular rate, Acceleration, and Magnetic field (3 axis each). Though there was some documentation on the accuracy of this information, I knew that the motor in the aircraft would cause each of these signals to have much higher noise levels than the documentation would specify. I posited that this was due to both vibration and emf from the rotating motor and high voltage 3 phase wires. However, through testing with and without the prop attached, it became clear that only the vibration caused the noise.

In order to find the noise in each sensor as a function of throttle, I constructed the following test:

I placed the aircraft between two cinder blocks so that it could not move and slowed stepped up the throttle while recording data. The following graph represents the findings:

As can be seen above, the 1 sigma noise from each IMU measurement increases with the throttle value. This data was then polynomially fit to a function and used as the measurement noise. This meant that the measurement noise was a function of an input parameter, something I'd heard mentioned but never dealt with before this.

However, I wasn't done with the IMU yet. Both the gyro and magnetometer had significant biases. Unfortunately, I spent an embarrassingly long time not realizing that the magnetometer had biases which caused many problems.

Once I'd understood this, finding those biases was simple. I performed one test where I set the aircraft down on a flat surface pointing directly north and let it take data for a few minutes. With this, I could find the gyro bias and also find the value the mag produced when aligned with the NED frame. This will be important shortly.

In order to find the magnetometer bias, I carefully and consistently rotated the aircraft 360 degrees while keeping it flat. The mean values from this rotation would serve as the bias in X and Y. I then rotated the aircraft by 90 degrees about the roll axis and repeated the process, giving me my Z bias.

Now I was able to describe the measurements of the gyro, $\vec{\omega}_t = \vec{\omega}_m - \vec{\omega}_{bias}$, and the accelerometer, $\vec{a}_t = \vec{a}_m$.

The measurement from the mag was $\vec{B}_t={}^{B}C^{I} (\vec{B}_m-\vec{B}_{bias})$.

EKF Overview

There are many sources for the derivation and operation of an EKF so I won't go over any of that. However, there are a variety of flavors of EKFs (Continuous-Continous, Continuous-Discrete, etc), so I'll describe my specific choice.

Though in the past I've used the Continuous-Discrete flavor, where I propagate my previous state through non-linear dynamics using a runge-kutta program, I knew that I was now writing something that I'd have to put on a microcontroller so I chose to use a Discrete-Discrete form. The two options were then the transition matrix $\Phi=e^{F\Delta t}$ where F is the jacobian of the derivative function wrt to the states or the linear approximation $I + F\Delta t$. I chose the latter to make it easier to implement on the microcontroller.

Note that in the quaternion attitude form, the quaternion was renormalized after every update.

Performance with initial flight data

The first time flying and recording data I hadn't yet integrated the barometer and had made a rookie mistake: I neglected to check the ranges on my sensors, particularly the accelerometer. The raw data from these sensors looked like this

The plot is not cut off. The data is cutoff. I'd forgotten to check the necessary ranges of the accelerometer. Since the altitude from the GPS was already poor and the accelerometer was giving cutoff data the down component of the state estimate varies wildly. In the plot below you'll see the estimates from all three axis of NED. The "Forward" and "Backward" refer to the estimates given by a Kalman filter (KF) as the "Forward" and the Kalman Smoother (KS) as "Backwards". In theory, the KS is supposed to give you better results than the KF because it has knowledge of both the future and the past. However, I'm using it here to demonstrate that the estimates vary significantly between the two filters even with the same data. I also recognize that displaying the covariance of the estimate is a better way of communicating this point but as I'm writing this I'm having issues with MATLAB so I will leave this plot for now.

Position Estimates of Initial Flight

A Minor Improvement

After integrating the barometer and setting the proper range for the accelerometer, I got the chance to fly again. This time leading to much better results across all states. However, before showing all of the fantastic plots, I first want to show one more problem and solution.

Velocity Estimates with heading problem

Above, we see the body frame velocity components. Though they generally look reasonable, there are occasional spikes that are clearly non-physical. For instance, at 58 seconds, the forward velocity becomes nearly 10m/s backwards. This is clearly wrong. The culprit is the heading. Though our attitude is represented in quaternions in the model, the heading is still an angle. Therefore if the aircraft is near the crossing between 0 and 360 degrees, the filter can measure the residual as nearly 360 or -360! To fix this, I applied the very simple logic:

if res_heading > pi

res_heading = res_heading - 2*pi;

elseif res_heading < -pi

res_heading = res_heading + 2*pi;

end

where res_heading is the residual of the heading.

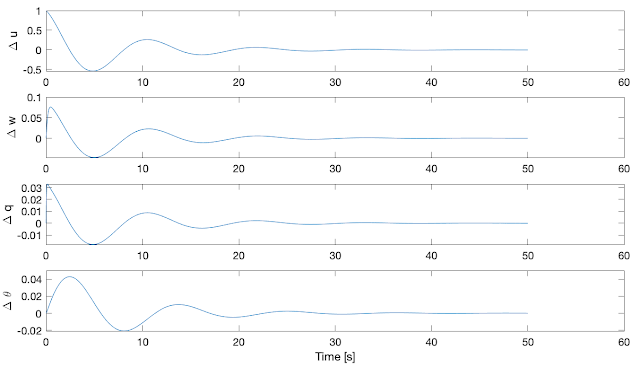

Performance with Better Flight Data

This small change gave the following state estimates. I will describe the flight that produced them below.

Progress is making new mistakes. The mistake here was that I forgot to start recording data until about halfway into the flight. That is why you see the Down position start at 0 and then land at a larger value (lower altitude). This made getting the filter to work to be a bit trickier because I didn't have a well-known initial estimate like I would have if I'd taken off from the ground. However, with a good guess, the filter was able to converge on some behavior that looked correct to me.

The main sanity checks were a velocity that stayed above zero (flight speed appears to be about 10m/s), a down position that stays below the ground position, and v, w, $\phi$, and $\theta$ values that hover around zero. Though obviously there are some biases based on the aircraft and how I fly. There is also obviously a decent amount of variation of every state. Though I cannot concretely say the variation is correct, I will say that the way I flew this aircraft makes large variations in every state somewhat expected. With more practice, I hope to have some smoother flights.

I have intentionally not included wind into this model yet and am assuming groundspeed is airspeed. To help enforce this I flew on a very calm day. In the future with either a pito tube or a model-based estimator, I should be able to estimate the wind.

Places for Improvement and Next Steps

This filter is a huge step forward and took many intermediate steps that I did not mention here. To test each and every element I rotated the plane countless times, walked around a backyard, took the plane on a bike ride with me, and even took it for a data-collection drive at 10pm once. I'm very proud of what I'm seeing but know that there still could be some bugs. A filter like this might be riding a few measurements super hard which gives it good results but in reality has a bug somewhere else. The covariance of each estimate should be helpful in understanding that so I will look into that in the future.

The next steps are where things get good. I will use the data from this flight to find the estimated parameters of the aircraft. From there, I should be able to implement a model-based filter as well as do some proper model-based controller design. In the unfortunate case where the model-based filter does not work or is too computationally difficult for the microcontroller, I will still be able to implement this filter on the microcontroller. For reference, I believe that ArduPilot does not use a model-based filter and instead uses a filter similar to this for its estimation.

Thank you for reading. I hope you learned something. I sure did. Let me know if you find any mistakes or have any advice!